This question on LeetCode took me the whole night to solve, and it's another typical dynamic programming problem. It seems like I have been encountering a lot of DP problems lately.

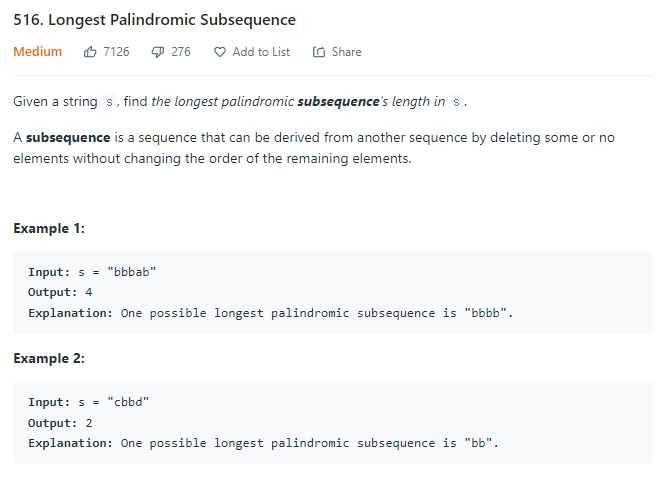

Question

Solution

We create a 2D-array dp, where dp[i][j] contains the answer of the longest palindromic subsequence of the substring formed from index i to j in s. There are three conditions following:

- i == j

THe single-word substring length is always one - s[i] == s[j]

If the first and the last characters match, we can include these two characters in the palindromic subsequence and add it to the longest palindromic subsequence formed using the substring from indexi + 1toj - 1 - s[i] != s[j]

If the first and the last characters do not match, we look for the longest palindromic subsequence in both the substrings formed after ignoring the first and last characters. We pick the maximum of these two

1 | //Time complexity: O(n^2) |